Secure Your AI Workflow Using Local Tokenisation

Secure your AI workflow with local tokenisation. PaigeSafe is a lightweight tool perfect for small agencies and freelancers handling sensitive client data in ChatGPT, Claude and other AI tools.

If you've spent any time at all using cloud-based LLMs like ChatGPT or Claude for client work, you've probably had that voice in the back of your head kick in: "Should I really be pasting this into a chat?" I'm sure that moment of hesitation is all too familiar to anyone who has started to integrate AI into their workflows.

Every day, many of us paste sensitive content into AI tools (client data, business strategies, internal documents), often without really thinking about where that information ends up. Depending on the provider and your account settings, that data may be used to train future models, with the risk that it resurfaces in other users' chats. For freelancers and small agencies handling confidential client work, Large Language Models (LLMs) create a real dilemma: they're too useful to avoid, but carefully sanitising content is a chore.

Enterprises solve this with dedicated data-protection platforms, but these are overkill and far too expensive for the rest of us. Those who want to take advantage of LLMs have been left to read carefully through documents and run manual search and replace on names and numbers. This is tedious and error-prone, and sensitive details can still slip through. Yet taking unnecessary risks with client data, spending ages on manual anonymisation or avoiding AI tools altogether for sensitive work are no longer viable options if you want to stay competitive.

Introducing the PaigeSafe Document Security Tool

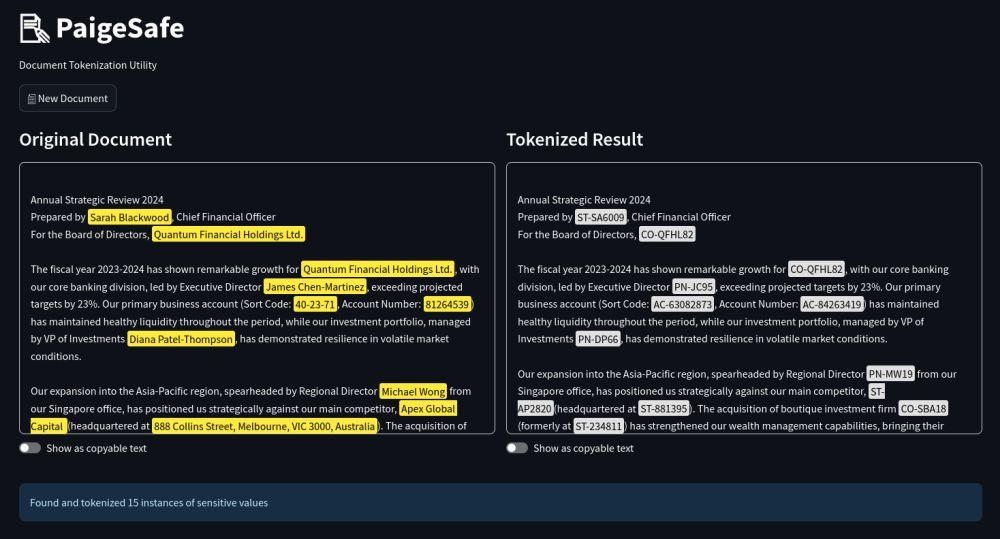

PaigeSafe is a document security tool that helps protect your confidential information when using Large Language Models (LLMs) like ChatGPT and Claude. It uses tokenisation, replacing sensitive data with non-sensitive placeholders. We originally built it as an in-house tool because we faced exactly these challenges. As a small team, we needed something that just worked, without expensive licences or a steep learning curve.

PaigeSafe is currently a prototype, built to test whether there is demand for this kind of utility. It offers basic functionality, and the code lacks robust error checking. However, because it is designed to run locally, there is minimal risk to your documents. All it does is offer a convenient way to search and replace text before you paste or upload it to an LLM. I regularly use it to sanitise my own documents.
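To make the search-and-replace idea concrete, here is a minimal Python sketch of reversible tokenisation. It is an illustration only, not PaigeSafe's actual code: the `tokenise` and `detokenise` functions and the `[TOKEN_n]` placeholder format are hypothetical. You supply the sensitive terms, each one is replaced with a numbered placeholder, and the placeholder-to-original mapping stays on your machine so you can restore the real values in the AI's reply.

```python
import re


def tokenise(text: str, terms: list[str], prefix: str = "TOKEN") -> tuple[str, dict[str, str]]:
    """Replace each sensitive term with a numbered placeholder (hypothetical helper).

    Returns the sanitised text plus a placeholder -> original mapping.
    The mapping is the "key" and should never be pasted into the AI tool.
    """
    mapping: dict[str, str] = {}
    next_id = 1
    # De-duplicate (keeping order), drop empty strings, then handle the
    # longest terms first so "Acme Ltd" is replaced before "Acme".
    unique_terms = [t for t in dict.fromkeys(terms) if t]
    for term in sorted(unique_terms, key=len, reverse=True):
        # Lookarounds act as whole-word boundaries, so "Ann" does not match
        # inside "Annual". Matching is case-insensitive, so the restored value
        # uses the spelling given in `terms`.
        pattern = re.compile(rf"(?<!\w){re.escape(term)}(?!\w)", re.IGNORECASE)
        placeholder = f"[{prefix}_{next_id}]"
        text, count = pattern.subn(placeholder, text)
        if count:
            # Only record (and use up a number for) terms actually found.
            mapping[placeholder] = term
            next_id += 1
    return text, mapping


def detokenise(text: str, mapping: dict[str, str]) -> str:
    """Swap placeholders (e.g. in the AI's reply) back to the original values."""
    for placeholder, original in mapping.items():
        # The closing bracket means "[TOKEN_1]" never matches inside "[TOKEN_10]".
        text = text.replace(placeholder, original)
    return text


if __name__ == "__main__":
    doc = "Acme Ltd wants Jane Smith to review the Acme budget."
    safe, key = tokenise(doc, ["Acme", "Acme Ltd", "Jane Smith"])
    print(safe)  # [TOKEN_2] wants [TOKEN_1] to review the [TOKEN_3] budget.
    print(detokenise(safe, key) == doc)  # True
```

Only `safe` would be pasted into ChatGPT or Claude; `key` never leaves your computer. Sorting longest-first and matching whole words are simple guards against two classic manual search-and-replace mistakes: partially replaced names and matches inside unrelated words.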

Uses and Limitations

PaigeSafe is not trying to be an enterprise solution for organisations that must meet strict compliance requirements. Here's where it fits in the document security landscape:

- Perfect for: Freelancers, small agencies, independent developers

- Good for: Regular business documents, client communications, project data

- Not for: Banking systems, medical records, top-secret government files

PaigeSafe is a lightweight tool that helps you avoid accidentally exposing sensitive information to AI models. If you're handling typical client work such as website data, marketing plans, business strategies and project specs, this solution is for you. It's ideal for those "I need to run this past ChatGPT but shouldn't share the client's name" moments, or when you want to analyse customer feedback without exposing individual identities.

If you work for a financial institution, healthcare provider or government contractor, this solution is not for you.

Where to Find It

The tool is built with Python and the Streamlit framework. If you use Docker, you can install it easily by pulling the PaigeSafe image from Docker Hub. For more information, please visit the dedicated site at paigesafe.com.

Remember that it is still very much an early prototype, but more useful features will follow. Please send feedback to [email protected]

How to Install PaigeSafe

Find out how to install the prototype application by following the instructions on the PaigeSafe website.
